PanEcho©

Complete AI-enabled Echocardiography Interpretation with Multi-Task Deep Learning

Gregory Holste

UT Austin

Evangelos K. Oikonomou

Yale School of Medicine

Rohan Khera

Yale School of Medicine

Zhangyang Wang

UT Austin

Abstract

Echocardiography is a mainstay of cardiovascular care, offering non-invasive, low-cost, increasingly portable technology to characterize cardiac structure and function. Artificial intelligence (AI) has shown promise in automating aspects of medical image interpretation, but its applications in echocardiography have been limited to single views and isolated pathologies. To bridge this gap, we present PanEcho, a view-agnostic, multi-task deep learning model capable of simultaneously performing 39 diagnostic inference tasks from multi-view echocardiography. PanEcho was trained on >1 million echocardiographic videos and validated on an internal temporally distinct set and two external geographically distinct sets. It achieved a median area under the receiver operating characteristic curve (AUROC) of 0.91 across 18 diverse classification tasks and a normalized mean absolute error (MAE) of 0.13 across 21 measurement tasks spanning chamber size and function, vascular dimensions, and valvular assessment. PanEcho accurately estimates left ventricular (LV) ejection fraction (MAE: 4.4% internal; 5.5% external) and detects moderate or greater LV dilation (AUROC: 0.95 internal; 0.98 external) and systolic dysfunction (AUROC: 0.98 internal; 0.94 external), as well as severe aortic stenosis (AUROC: 0.99), among others. PanEcho is a uniquely view-agnostic, multi-task, open-source model that enables state-of-the-art echocardiographic interpretation across complete and limited studies, serving as an efficient echocardiographic foundation model.

Model Architecture

PanEcho is a view-agnostic, multi-task model that performs 39 reporting tasks from echocardiogram videos. The model consists of an image encoder, temporal frame Transformer, and task-specific output heads. Since PanEcho is view-agnostic, multi-view echocardiography can be leveraged to integrate information across views. At test time, predictions are aggregated across echocardiograms acquired during the same study to generate study-level predictions for each task.
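The study-level aggregation step described above can be illustrated with a minimal sketch. It assumes that per-video predictions are combined by a simple mean; the text only says predictions are "aggregated", so the averaging rule, the function name `aggregate_study`, and the task names `EF` and `LVDilation` are all hypothetical and used for illustration only.

```python
from collections import defaultdict
from statistics import mean


def aggregate_study(video_preds):
    """Average per-video predictions into one study-level value per task.

    video_preds: list of dicts (one per video) mapping task name -> float.
    Simple averaging is an assumption made for this sketch.
    """
    per_task = defaultdict(list)
    for preds in video_preds:
        for task, value in preds.items():
            per_task[task].append(value)
    return {task: mean(values) for task, values in per_task.items()}


# Hypothetical predictions for three videos acquired in the same study
study = [
    {"EF": 55.0, "LVDilation": 0.10},
    {"EF": 57.0, "LVDilation": 0.20},
    {"EF": 59.0, "LVDilation": 0.30},
]
study_level = aggregate_study(study)
print(study_level)
```

Because the model is view-agnostic, every video in a study can contribute to every task in a scheme like this, regardless of which view it shows.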

Multi-Task Evaluation

Classification (left) and regression (right) performance of PanEcho in a temporally held-out test set. * = moderate or greater; ^ = severe

External Validation

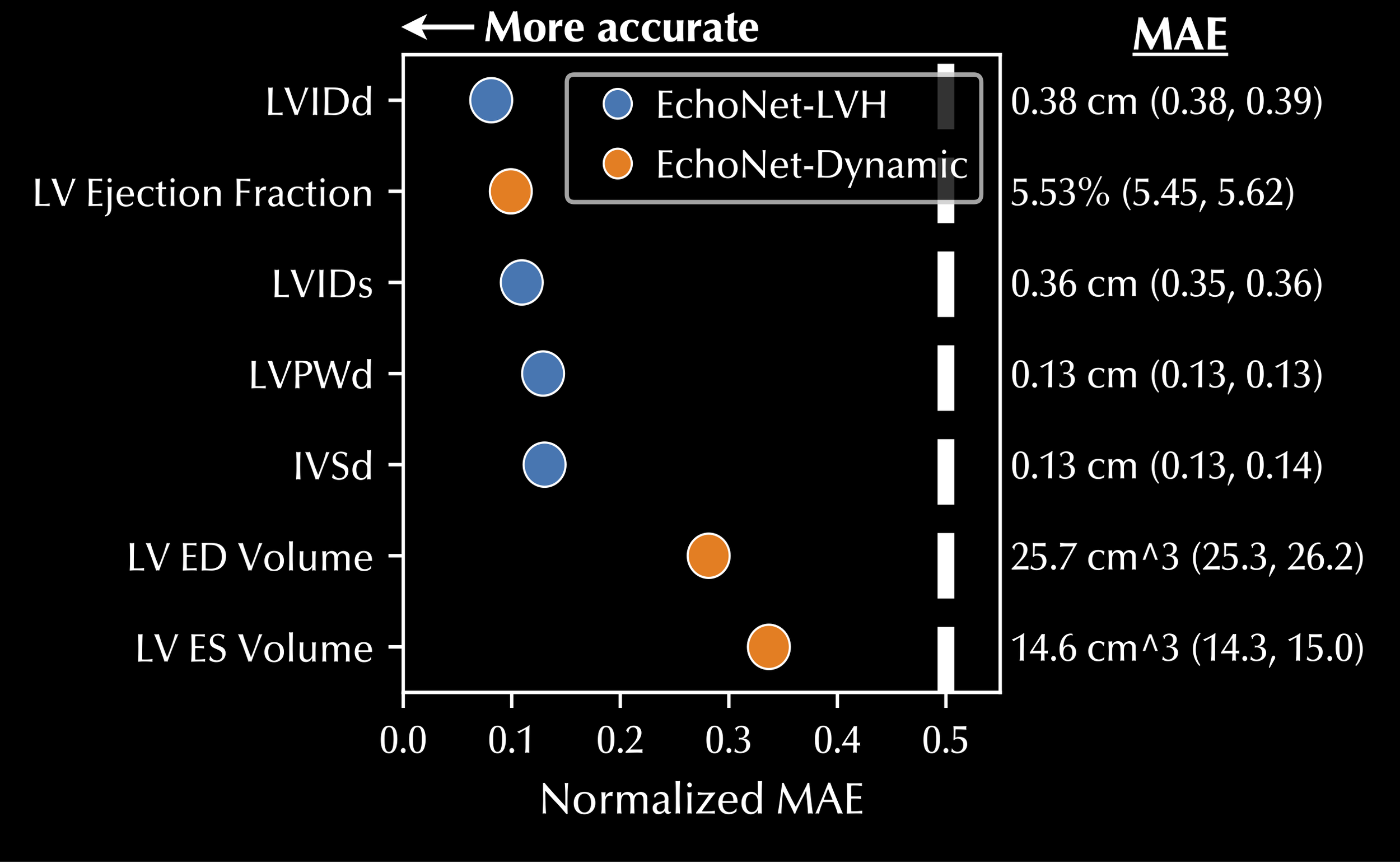

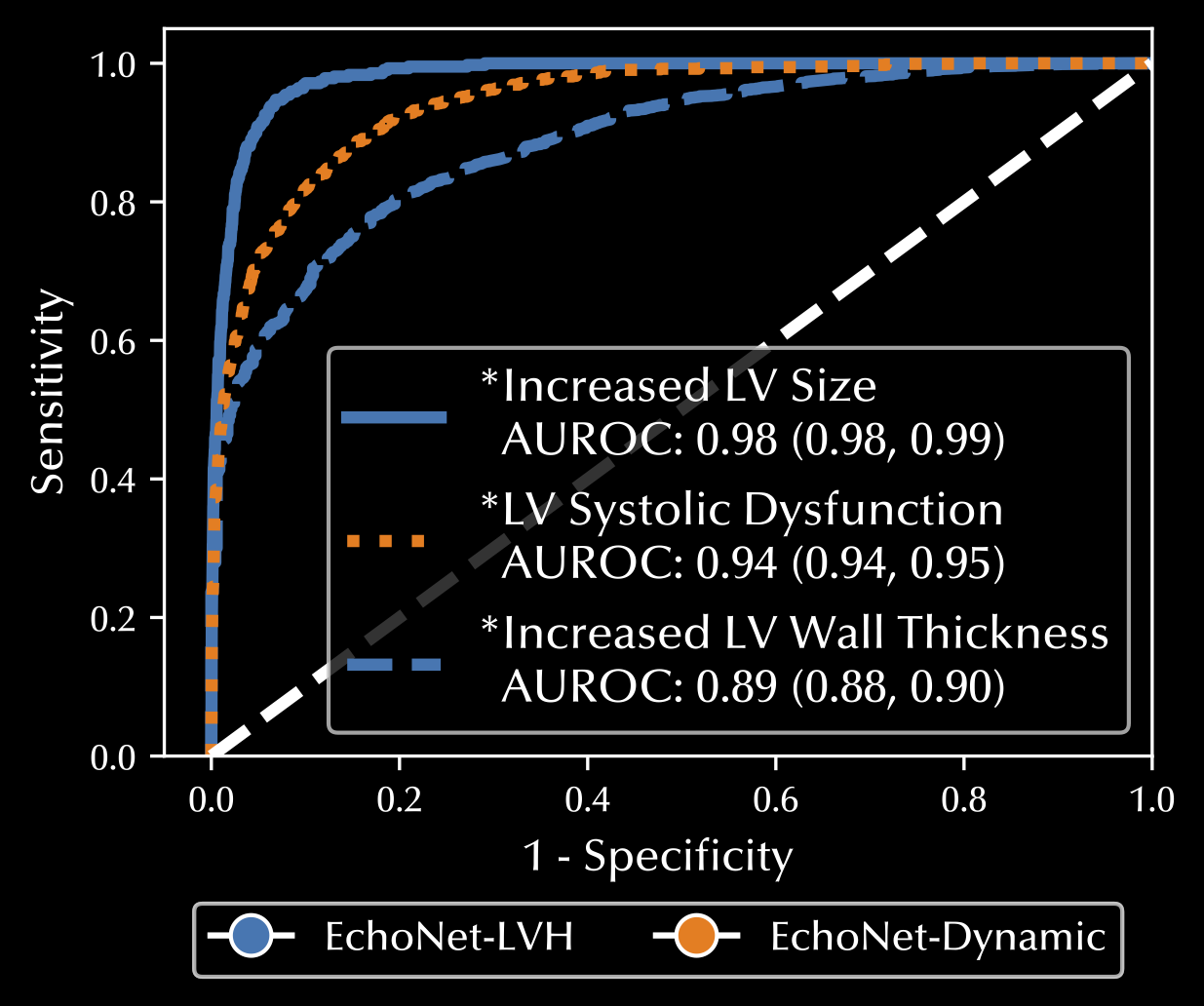

Classification (left) and regression (right) performance of PanEcho in two external test cohorts, EchoNet-LVH (blue) and EchoNet-Dynamic (orange). * = moderate or greater

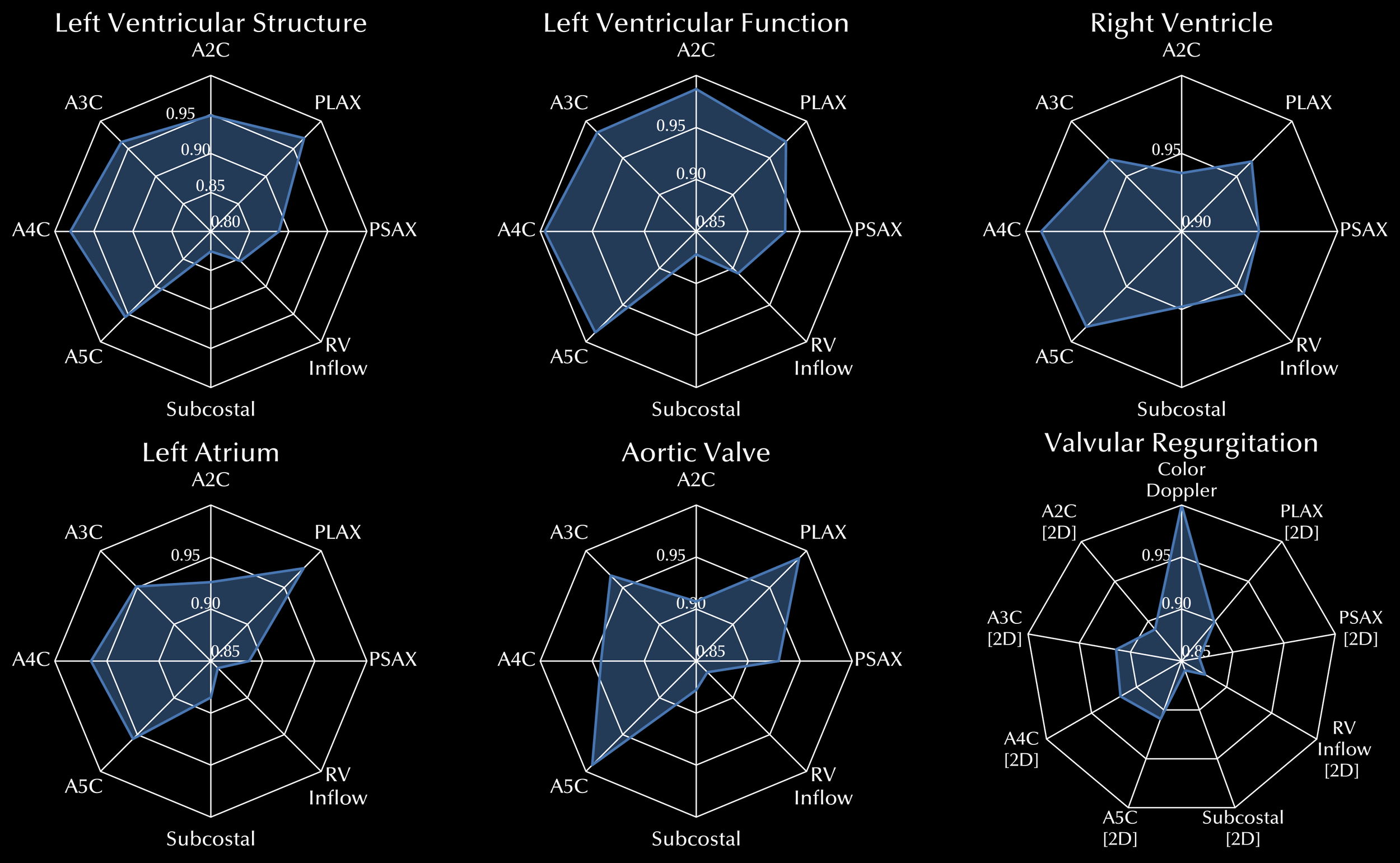

View Importance

PanEcho naturally identifies which views are most relevant to each aspect of cardiovascular health.

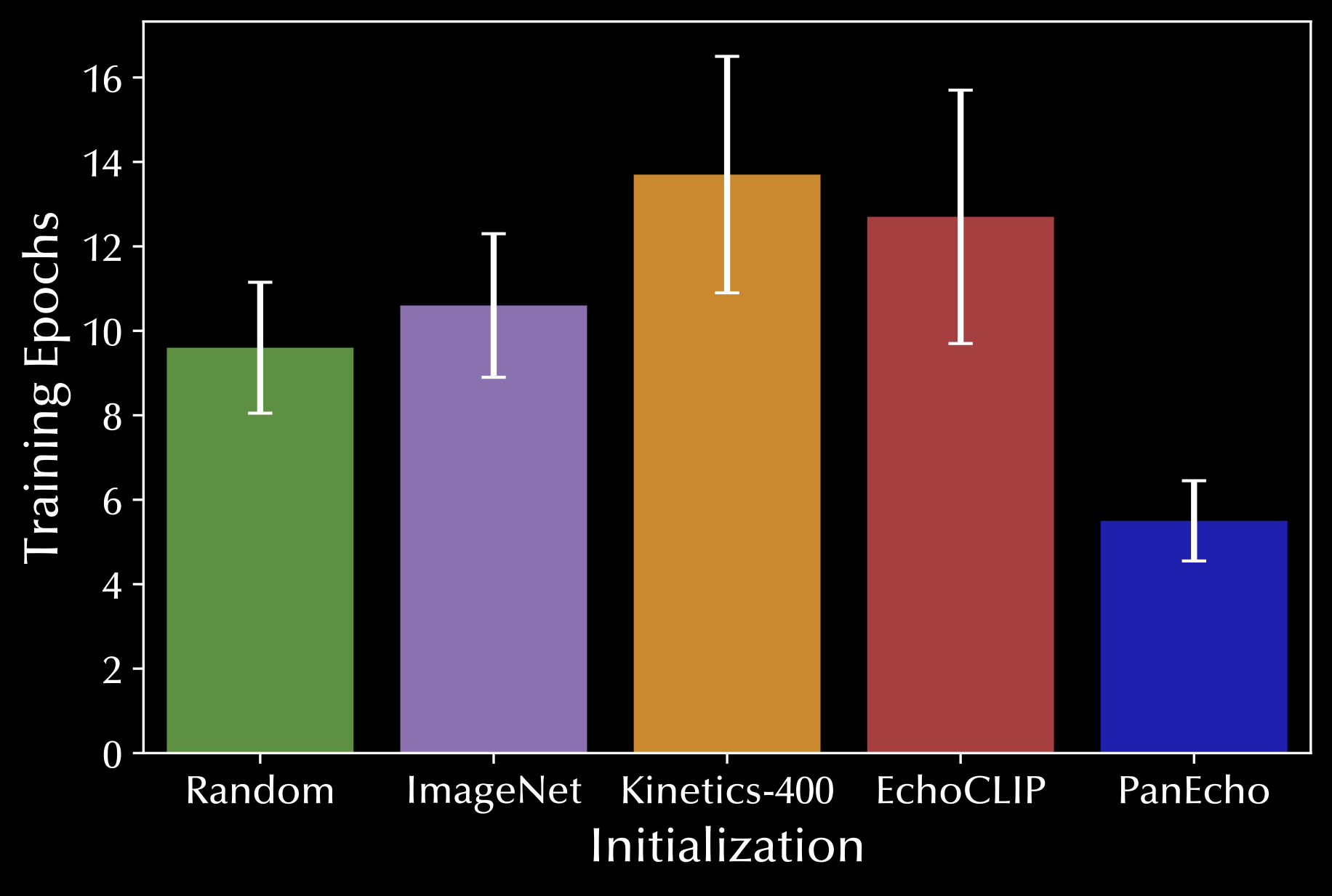

Transfer Learning Capabilities

PanEcho can be used as a foundation model due to its exceptional knowledge transfer capabilities. When fine-tuned for LV ejection fraction estimation in an out-of-domain pediatric population, PanEcho pretraining outperforms other transfer learning approaches in both accuracy (left) and efficiency (right).

Model Usage

You can directly use PanEcho through PyTorch Hub:

import torch

# Import PanEcho

model = torch.hub.load('CarDS-Yale/PanEcho', 'PanEcho', force_reload=True)

# Demo inference on random video input

x = torch.rand(1, 3, 16, 112, 112)

print(model(x))

Input: PyTorch Tensor of shape (batch_size, 3, 16, 112, 112) containing a 16-frame echocardiogram video clip at 112 x 112 resolution that has been ImageNet-normalized. See our data loader for implementation details, though you are free to preprocess however you like.
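As a rough illustration of the input requirements, the sketch below shows ImageNet normalization of a single 8-bit RGB pixel and the selection of 16 frame indices for one clip. The mean and standard deviation are the standard ImageNet statistics. Taking consecutive frames from a fixed start is an assumption, and the helper names `normalize_pixel` and `clip_indices` are hypothetical; the project's own data loader may sample and preprocess differently.

```python
# Standard ImageNet per-channel statistics (RGB order)
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb):
    """Scale an 8-bit RGB pixel to [0, 1], then apply ImageNet mean/std per channel."""
    return tuple(
        (c / 255.0 - m) / s for c, m, s in zip(rgb, IMAGENET_MEAN, IMAGENET_STD)
    )


def clip_indices(num_frames, clip_len=16, start=0):
    """Indices of clip_len consecutive frames (an assumed sampling scheme)."""
    if start < 0 or start + clip_len > num_frames:
        raise ValueError("clip does not fit inside the video")
    return list(range(start, start + clip_len))


pixel = normalize_pixel((0, 0, 0))             # a black pixel after normalization
frames = clip_indices(num_frames=40, start=8)  # frames 8 through 23
print(pixel, frames)
```

In a tensor pipeline the same normalization is typically applied to whole frames at once, for example with `torchvision.transforms.Normalize`.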

Output: Dictionary whose keys are the tasks (see here for breakdown of all tasks) and values are the predictions. Note that binary classification tasks will need a sigmoid activation applied, and multi-class classification tasks will need a softmax activation applied.
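The activation step mentioned above can be sketched in plain Python. This is an illustration under stated assumptions: the task names and the `task_types` mapping are hypothetical (the real task list is in the project's task breakdown), raw outputs are represented as floats and lists rather than tensors, and regression outputs are assumed to need no activation.

```python
import math


def sigmoid(z):
    """Map a single logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits):
    """Map a list of logits to probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def postprocess(raw, task_types):
    """Apply the activation that matches each task's (assumed) type."""
    out = {}
    for task, value in raw.items():
        kind = task_types[task]
        if kind == "binary":
            out[task] = sigmoid(value)
        elif kind == "multiclass":
            out[task] = softmax(value)
        else:  # regression: assumed to be used as-is
            out[task] = value
    return out


# Hypothetical raw outputs and task types, for illustration only
raw = {"task_binary": 0.0, "task_multiclass": [1.0, 1.0, 1.0], "task_regression": 58.2}
task_types = {
    "task_binary": "binary",
    "task_multiclass": "multiclass",
    "task_regression": "regression",
}
probs = postprocess(raw, task_types)
print(probs)
```

With the actual tensor outputs, `torch.sigmoid` and `torch.softmax` (over the class dimension) play the same roles.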

Citation

@article{holste2024panecho,

title={PanEcho: Complete AI-enabled echocardiography interpretation with multi-task deep learning},

author={Holste, Gregory and Oikonomou, Evangelos K and Wang, Zhangyang and Khera, Rohan},

journal={medRxiv},

pages={2024--11},

year={2024},

publisher={Cold Spring Harbor Laboratory Press}

}